AI just killed the hardest part of video marketing. Hooks. Pacing. Energy. (Topview)

- Braden Barty

- 3 days ago

You know what's wild? We spent the last few years learning CapCut, Premiere, and DaVinci Resolve...

...only to discover the real skill was pattern recognition.

Enter: Topview AI Video Agent (powered by OpenAI's Sora 2).

This thing doesn't just edit videos. It's basically a digital pickpocket that steals attention patterns from the internet and repurposes them for your content.

Think of it like this: Remember when your mom said "if your friends jumped off a bridge, would you?"

Topview AI says "YES—and here's the exact bridge, the optimal jump angle, and three variations of the landing."

What it actually does:

Reverse-engineers viral videos down to the frame

Identifies the neurological trigger points that made people stop scrolling

Recreates that same dopamine architecture for YOUR content

Without you needing to understand why a 0.3-second pause at second 4 converts 40% better

It's like having a video editor who's watched 10 million TikToks and actually remembers what worked.

This is massive if you're a marketer or creator who's tired of playing "guess what the algorithm wants today."

⸻

But Wait—Isn't Sora 2 Just a "Video Generator"?

Here's where it gets interesting.

Sora 2 started as OpenAI's text-to-video model (the thing that makes AI videos from scratch). But the real power move? It shifted into editing mode.

Here's how it works as an editor:

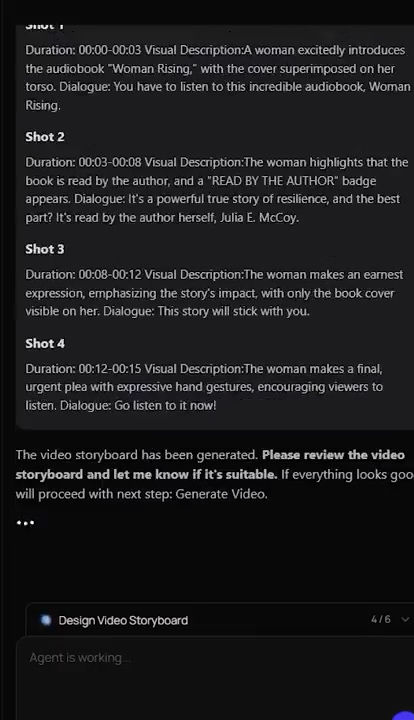

You upload your actual footage (up to 5 seconds initially, extendable) as an "input reference." Then you hit it with a targeted text prompt like:

"Remix: accelerate pacing by 20%, add viral hook beat-sync at 0.3s pause, swap background to neon energy spikes."

Sora 2 then:

Preserves your structure, motion, framing, and "world state" (fancy AI term for "keeps you looking like you")

Tweaks specific elements—lighting, actions, audio sync, CTAs

Regenerates targeted segments with scary-good accuracy

Outputs a polished MP4 in one pass

Translation: It's not timeline scrubbing in Premiere. It's regenerative editing.

Need to fix a lip-sync glitch? Done. Want to swap your outfit? Done. Need three variants with different energy levels? Done, done, done.

⸻

The Face Preservation Magic (Why You Don't Look Like a Deepfake)

Here's the part that actually matters if you're putting YOUR face on the internet:

Step 1: Create Your Digital "Cameo"

Record a short calibration video in Topview (basically reading prompts so it can capture your voice, facial movements, and expressions). This creates a reusable digital profile that locks in:

Your exact face structure

Natural expressions

Skin tone

Voice patterns

Movement style

Step 2: Upload Your Hook Footage

Feed in your raw video. Sora 2 analyzes it and regenerates while keeping your facial features and movements identical (90% lip-sync accuracy—which is honestly better than some TikTokers achieve naturally).

Step 3: Edit Via Text Prompts

Tell it what to change:

"Speed up hook 20%, neon background, same face energy"

"Add beat-sync pause at 0.3s, darker lighting"

"Three variants: calm, hyped, mysterious"

What stays the same: Your face. Your expressions. Your energy.

What changes: Pacing, background, lighting, CTAs, rhythm.

Your face doesn't morph. It doesn't get replaced. It doesn't turn into some uncanny valley nightmare.

Sora 2 maintains "temporal coherence" and "object permanence"—nerdy AI terms that basically mean you stay YOU while everything else adjusts around you.

Tools like Topview AI and Higgsfield layer on top of this, letting you match angles and lighting from viral videos seamlessly while keeping your face locked in.

⸻

The Creator Workflow in Real Life:

Record your calibration video once (5 minutes, max)

Shoot raw TikTok footage of yourself pitching a product

Feed it to Topview with remix prompts

It analyzes rhythm and hooks from viral references

Maintains your face and motions consistently

Exports 3-5 variants for A/B testing

No manual keyframing. No "let me just adjust this one frame for 40 minutes."

You record once. AI handles the rest.

⸻

Here's how this actually gets used 👇 No fluff. No theory. Just the playbook.

⸻

Use Case #1: The Marketer (a.k.a. "I Need 47 Ads By Tuesday")

Goal: Sell more without becoming a full-time TikTok actor.

Step-by-step:

1. Find a winning video. Scroll TikTok/Reels in your niche. Find something with 500k–5M views that makes you think "damn, I wish I made that."

2. Feed it to Topview AI like a hungry robot. The AI dissects:

Hook structure (those critical first 2 seconds)

Scene pacing (why they didn't scroll)

Visual rhythm (the editing beats)

Energy spikes (when viewers lean in)

3. Swap the product (while keeping YOUR face). Upload YOUR product video with your face. Same psychological architecture. Different offer. Same you.

It's like putting new wine in a bottle that already sold millions, except the sommelier is still you.

4. Export 3–5 variants. Test different:

Hook wording

CTAs

First frames

Energy levels

Backgrounds

All with your face perfectly preserved.

5. Launch everywhere. TikTok. Reels. Shorts. Paid ads. Your aunt's Facebook.
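Step 4 is really a small test matrix, and it helps to write the variants down before you start prompting. Here's a minimal Python sketch of that planning step, assuming you paste each generated prompt into Topview yourself. The dimension names, option values, and the `build_variant_prompts` helper are hypothetical illustrations, not part of Topview or any real API.

```python
import itertools
import random

# Hypothetical test dimensions, mirroring the list in step 4.
# None of these values come from Topview; swap in your own.
DIMENSIONS = {
    "hook": ["question opener", "bold claim opener"],
    "cta": ["Shop now", "Link in bio"],
    "energy": ["calm", "hyped", "mysterious"],
}


def build_variant_prompts(dimensions, max_variants=5, seed=0):
    """Return up to max_variants remix prompt strings, one per combination.

    Builds the full cross product of options, then samples a subset so the
    test stays inside a 3-5 variant budget.
    """
    keys = list(dimensions)
    combos = list(itertools.product(*(dimensions[k] for k in keys)))
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    chosen = rng.sample(combos, k=min(max_variants, len(combos)))
    prompts = []
    for combo in chosen:
        parts = [f"{key}: {value}" for key, value in zip(keys, combo)]
        prompts.append("Remix, same face: " + ", ".join(parts))
    return prompts


for prompt in build_variant_prompts(DIMENSIONS):
    print(prompt)
```

One design note: changing every dimension at once makes it harder to tell which change moved the numbers. If you have the budget, varying one dimension per variant against a fixed baseline is the cleaner test.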

Result (hypothetical but realistic):

1 editor → 10 ads/day instead of 2

Faster testing = faster learning

Lower customer acquisition cost (CAC) because you're borrowing proven attention patterns

Your face on every single one—building brand recognition

Marketing is math. This tool just gave you a calculator that remembers what you look like.
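If marketing is math, "faster testing = faster learning" only holds when you can tell a real winner from noise. Here's a minimal Python sketch that compares two variants' click-through rates with a standard pooled two-proportion z-test. The function name and the click and impression counts in the usage line are made-up placeholders for illustration, not Topview features or real campaign results.

```python
import math


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Compare the click-through rates (CTR) of two ad variants.

    Returns (ctr_a, ctr_b, z, p_value) using a pooled two-proportion
    z-test with a two-sided p-value (normal approximation).
    """
    ctr_a = clicks_a / views_a
    ctr_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        raise ValueError("No variation in the data (0% or 100% CTR overall)")
    z = (ctr_b - ctr_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return ctr_a, ctr_b, z, p_value


# Placeholder counts for illustration only.
ctr_a, ctr_b, z, p = two_proportion_z_test(120, 10_000, 165, 10_000)
print(f"A: {ctr_a:.2%}  B: {ctr_b:.2%}  z = {z:.2f}  p = {p:.4f}")
```

A small p-value (commonly below 0.05) suggests variant B's higher CTR is unlikely to be pure chance. With only a few hundred views per variant, expect most differences to come out inconclusive.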

⸻

Use Case #2: The Creator (a.k.a. "I'm One Burnout Away From Selling Feet Pics")

Goal: More views without the existential dread of daily content.

Step-by-step:

1. Pick a viral format. Examples:

"3 mistakes killing your _____"

"Nobody tells you this about _____"

"POV: You finally understand _____"

2. Run it through Topview AI. It recreates:

Hook cadence (the rhythm that hooks)

Jump cut timing (the pace that keeps people)

Visual beats (the patterns that please the monkey brain)

Energy flow (the vibe that converts)

3. Record once. Just you. Talking. Into a camera. Like a normal human who's had coffee.

4. Let AI do the heavy lifting. Auto pacing. Auto structure. Auto polish.

Your face stays consistent across all variants—no weird morphing, no uncanny valley vibes.

5. Publish daily. Consistency without therapy bills.

Result:

You stop guessing what works

You start compounding results

The algorithm rewards you like a slot machine that actually pays out

Your audience recognizes YOU in every video

⸻

The Reality Check (Because I'm Not a Hype Beast):

Topview AI is powered by Sora 2, which means realistic motion and regenerative editing. But let's be honest about the limitations:

❌ Short clips only – We're talking 5-20 second segments, not 20-minute YouTube deep dives

❌ No deep timeline control – This isn't replacing Premiere Pro's granular editing

❌ 90% lip-sync accuracy – Which means 10% of the time, your mouth might look like it's chewing invisible gum

❌ Watermarks on free tier – Because nothing in life is truly free (except disappointment)

But here's the thing:

You don't need perfection. You need volume at quality.

And 80% of scroll-stop power lives in the first 3 seconds anyway.

This tool systematizes "attention patterns" at scale. It doesn't replace your creativity—it multiplies your output without requiring you to become an editing monk.

It fits perfectly with tools like Dzine.ai and Kling.ai: creation gets cheaper, attention stays expensive, and smart marketers win by adding volume.

⸻

Why this actually matters (beyond the hype):

👉 Creation is getting absurdly cheap

👉 Attention is still expensive as hell

Topview AI lives in the sweet spot:

Less time creating

More time distributing

More shots on goal

Your face building brand equity across every test

It's not about replacing creativity. It's about not wasting creativity on stuff that can be systematized.

Record once. Remix for Reels, TikTok, and Shorts. Test fast. Win faster.

⸻

Bottom line:

If you're still "winging it" with hooks and pacing, you're basically doing powerlifting without watching a single form video.

Sure, you might get strong...

...but you'll probably just hurt your back and wonder why everyone else is getting results faster.

The new playbook:

Steal what already works

Let AI be your spotter

Add volume

Win

That's it.

No guru courses. No "secret sauce." Just pattern recognition at scale.

Welcome to 2026, where the robots don't steal your job—they just make you significantly better at it.